What is an algorithm?

An algorithm is a finite sequence of well-defined, computer-implementable instructions, typically to solve a class of problems or to perform a computation. Algorithms are unambiguous specifications for performing the calculation, data processing, automated reasoning, and other tasks.

Analysis of algorithm

After designing an algorithm, it has to be checked and its correctness needs to be predicted; this is done by analyzing the algorithm. The algorithm can be analyzed by tracing all step-by-step instructions, reading the algorithm for logical correctness, and testing it on some data using mathematical techniques to prove it correct. Another type of analysis is to analyze the simplicity of the algorithm. That is, simply design the algorithm so that it becomes easier to be implemented. However, the most straightforward way of solving a problem may not be sometimes the best one. Moreover, there may be more than one algorithm to solve a problem. The choice of a particular algorithm depends on the following performance analysis and measurements :

- Space Complexity

- Time Complexity

The time used by a computer to solve a problem using the algorithm, when input values are of a specified size. There are three types of time complexity:

- Best case — the minimum possible time depending on the output (at least)

- Worst case — the maximum possible time depending on the output (at most)

- Average case — the average time required to complete the execution

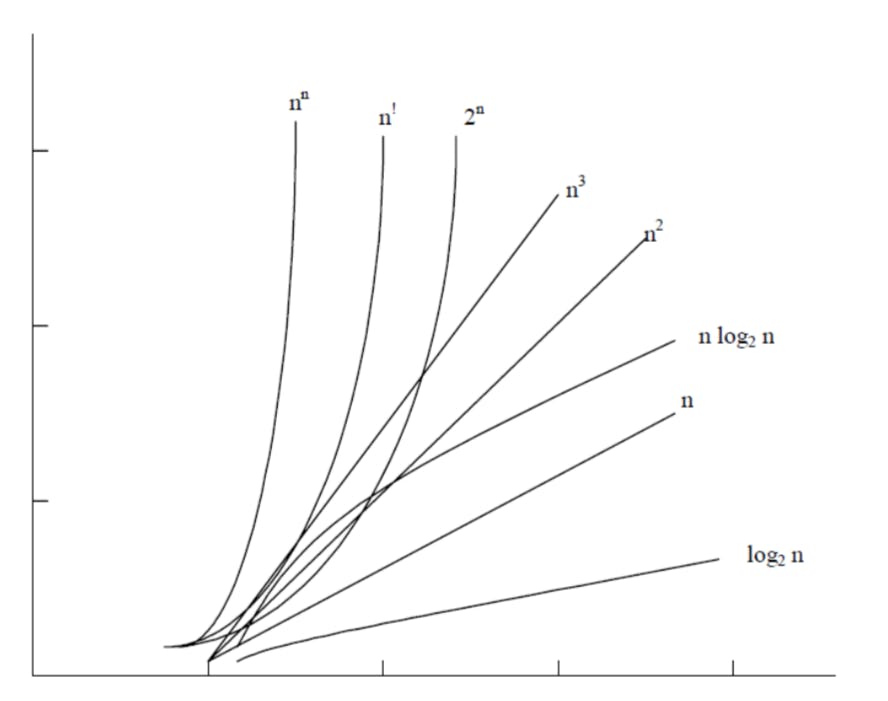

Some common time complexities are listed below:

- Constant Time

A simple statement that takes only a constant time. For example variable declaration, displaying the menu, etc. takes only constant time. The order of growth is considered as 1.

- Linear Time

When the time taken by the code segment is proportional to n.This happens when a particular code block is repeated n no: of times. The order of growth is considered as n. Eg:-

int i;

for(i=1;i<=n;i++)

print("%d",i);

- Logarithmic Time

It usually occurs when you are dividing something into two(binary search, binary trees). The order of growth is considered as log N. Eg:-

"""Code for binary search a represents the array and s represents the search element"""

int start=0,end=9;

while(start<=end)

mid=(start+end)/2

if(a\[mid\]==s)

print("Element is found");

else if(a\[mid\]<s)

start=mid+1

else

end=mid-1

- Polynomial Time

The order of growth is a polynomial. It occurs in the case of nested loops.

//O(n^3)

for(i=1;i<n;i++)

for(j=1;j<n;j++)

for(k=1;k<n;k++)

print(k)

- Exponential Time

The order of growth is 2^N.

The function that involves ’n’ as an exponent, i.e., 2n, nn, n ! is called exponential functions, which is too slow except for small size input function where growth is less than or equal to n c,(where ‘c’ is a constant) i.e.; n3, n2, nlog2n, n, log2n are said to be polynomial. Algorithms with polynomial-time can solve reasonable-sized problems if the constant in the exponent is small.

When we analyze an algorithm it depends on the input data, there are three cases :

- Best case

- Average case

- Worst case

In the best case, the amount of time a program might be expected to take on the best possible input data. In the average case, the amount of time a program might be expected to take on typical (or average) input data. In the worst case, the amount of time a program would take on the worst possible input configuration.

Asymptotic Notations

Asymptotic Notations are languages that allow us to analyze an algorithm’s running time by identifying its behavior as the input size for the algorithm increases. This is also known as an algorithm’s growth rate.

The following 3 asymptotic notations are mostly used to represent the time complexity of algorithms:

Big Oh (O)

Big Oh is often used to describe the worst-case of an algorithm by taking the highest order of a polynomial function and ignoring all the constants' values since they aren’t too influential for sufficiently large input.

Big Omega (Ω)

Big Omega is the opposite of Big Oh, if Big Oh was used to describe the upper bound (worst-case) of an asymptotic function, Big Omega is used to describe the lower bound of an asymptotic function. In an analysis algorithm, this notation is usually used to describe the complexity of an algorithm in the best case, which means the algorithm will not be better than its best-case.

Big Theta (Θ)

When an algorithm has complexity with lower bound = upper bound, say that an algorithm has a complexity O(n log n) and Ω(n log n), it has the complexity Θ(n log n), which means the running time of that algorithm always falls in n log n in the best-case and worst-case.

SPACE COMPLEXITY

Analysis of space complexity of an algorithm or program is the amount of memory it needs to run to completion.

Some of the reasons for studying space complexity are:

- If the program is to run on a multi-user system, it may be required to specify the amount of memory to be allocated to the program.

- We may be interested to know in advance whether sufficient memory is

available to run the program. - There may be several possible solutions with different space requirements.

- Can be used to estimate the size of the largest problem that a program can solve.

The space needed by a program consists of the following components.

- Instruction space: Space needed to store the executable version of the program and it is fixed.

- Data space: Space needed to store all constants, variable values and has further two components :

- Space is needed by constants and simple variables. This space is fixed.

- Space is needed by fixed-sized structural variables, such as arrays and structures.

Dynamically allocated space. This space usually varies.

Environment stack space: This space is needed to store the information to resume the suspended (partially completed) functions. Each time a function is invoked the following data is saved on the environment stack :

- Return address: i.e., from where it has to resume after completion of the

called function. - Values of all lead variables and the values of formal parameters in the function being invoked.

The amount of space needed by the recursive function is called the recursion stack space. For each recursive function, this space depends on the space needed by the local variables and the formal parameter. In addition, this space depends on the maximum depth of the recursion i.e., the maximum number of nested recursive calls.

TIME-SPACE TRADE-OFF

There may be more than one approach (or algorithm) to solve a problem. The best algorithm (or program) to solve a given problem requires less space in memory and takes less time to complete its execution. But in practice, it is not always possible to achieve both of these objectives. One algorithm may require more space but less time to complete its execution while the other algorithm requires less time-space but takes more time to complete its execution.

Thus, we may have to sacrifice one at the cost of the other. If the space is our constraint, then we have to choose a program that requires less space at the cost of more execution time. On the other hand, if time is our constraint such as in a real-time system, we have to choose a program that takes less time to complete its execution at the cost of more space.